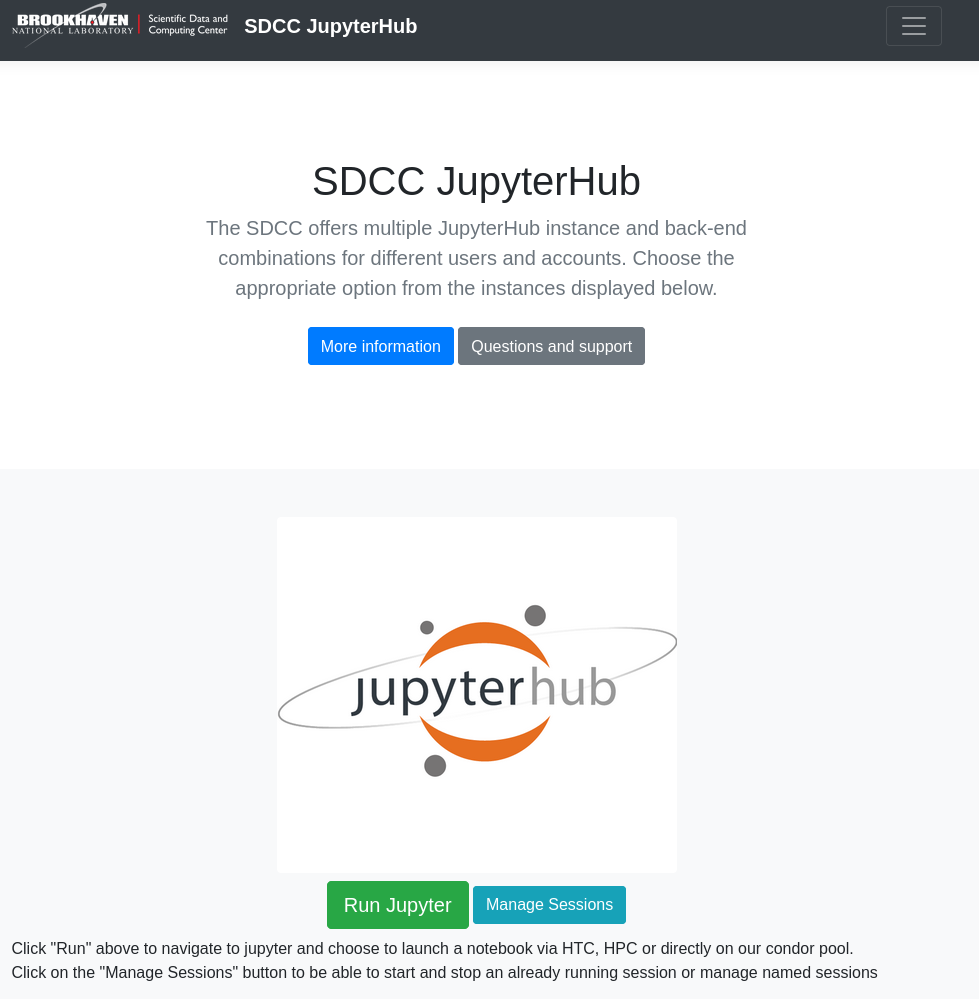

The portal is accessed through a load-balancing web proxy located at https://jupyter.sdcc.bnl.gov/. The proxy offers redirection to multiple entry points depending on the resources required. Currently, there are two types of entry points:

- HTC (High Throughput Computing): provides access to the SDCC compute farm, including the HTCondor shared pool queues.

- HPC (High Performance Computing): provides access to GPU computing resources on the Institutional Cluster or the KNL Cluster via Slurm.

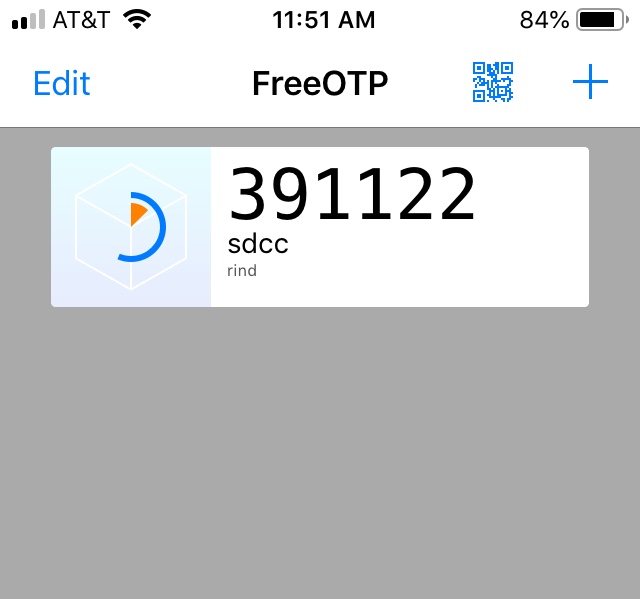

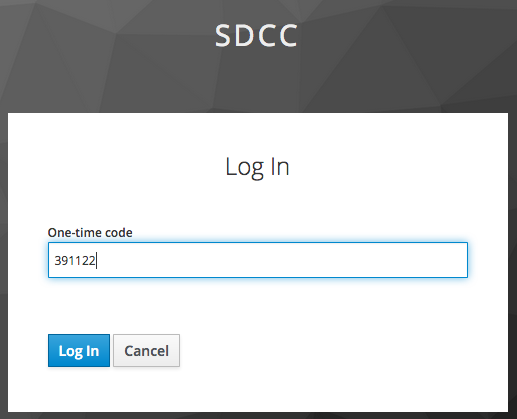

Selecting either the "Run Jupyter" or "Manage Sessions" button redirects users to a login page, which requires a standard SDCC username and password. Because these servers provide interactive access to the SDCC, two-factor authentication is also required: after a correct username and password are entered, the user's identity is further verified with a one-time password code. This procedure requires a smartphone with either the FreeOTP or the Google Authenticator app installed. For users without a smartphone, there is an alternative method using a Chrome browser extension with Google Authenticator. When logging in for the first time, users are presented with a QR code that links their account with the app. Once the account is linked, subsequent logins request a one-time code provided by the app:

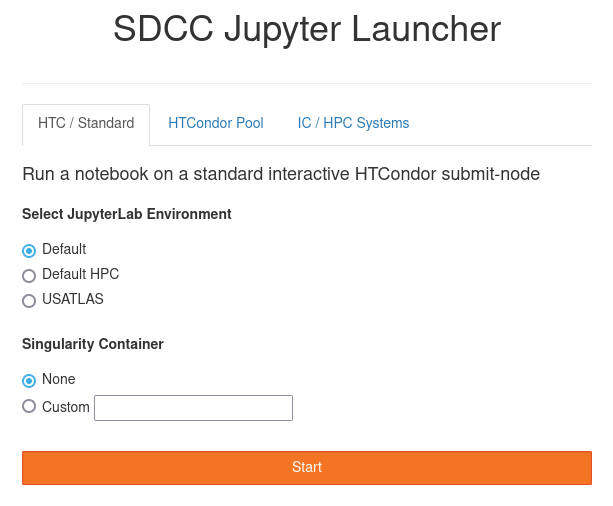
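For readers curious about where the one-time code comes from: FreeOTP and Google Authenticator most commonly generate time-based one-time passwords (TOTP, RFC 6238). The QR code shown at first login typically encodes a shared base32 secret, and the app combines that secret with the current time to produce a short code that the server can verify independently. The sketch below assumes the usual defaults (HMAC-SHA1, 30-second time step, 6 digits); the exact parameters used by SDCC are not stated on this page, and the function name `totp` is illustrative only.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute an RFC 6238 TOTP code (assumed defaults: SHA-1, 30 s, 6 digits)."""
    # Decode the shared secret; add "=" padding that QR-code secrets usually omit.
    key = base64.b32decode(secret_b32.upper() + "=" * (-len(secret_b32) % 8))
    # Number of whole time steps since the Unix epoch (T0 = 0).
    t = time.time() if for_time is None else for_time
    counter = int(t // step)
    # HMAC the 8-byte big-endian counter with the secret key.
    digest = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): pick 4 bytes at an offset given by the last nibble.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # RFC 6238 test secret (not a real account secret).
    secret = base64.b32encode(b"12345678901234567890").decode()
    print(totp(secret, for_time=59, digits=8))  # RFC 6238 test vector: 94287082
```

Because both the app and the server derive the code from the same secret and clock, the code is only valid for a short window, which is why it must be entered promptly.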

Once the code is entered successfully, the hub presents a form where the user can choose to launch a session on our HPC resources, on our HTC submit nodes (called "standard"), or, if needed, directly on our HTC farm:

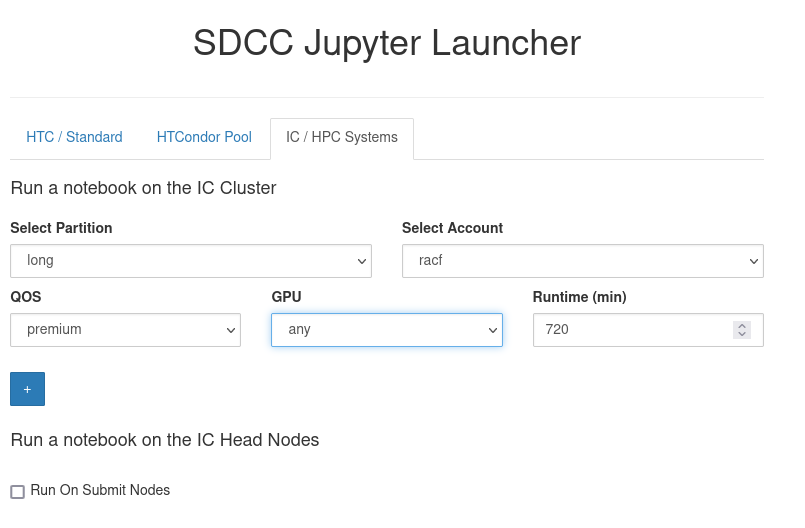

To run a Jupyter session on the Institutional Cluster (IC), users must select a valid partition and account, as well as the GPU type and the wall clock limit for their job. The form shows only the options available to each user, so it may look different from the one depicted above. In addition, once a partition is selected, the form automatically limits the remaining parameter choices to those valid for that partition. Users can also check the "Run On Submit Nodes" option to launch a notebook on the cluster head node and submit further work to the cluster from there (via Dask, for example).
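The form fields described above correspond closely to standard Slurm `sbatch` options (`--partition`, `--account`, `--time`, and a GPU request via `--gres`). The sketch below shows that correspondence as `#SBATCH` lines. It is an illustration, not a description of how the hub builds its job: it assumes the GPU type is requested as a generic resource, and the helper `sbatch_directives` as well as the partition, account, and GPU type values are hypothetical.

```python
from typing import List, Optional


def sbatch_directives(partition: str, account: str, walltime: str,
                      gpu_type: Optional[str] = None, gpus: int = 1) -> List[str]:
    """Return #SBATCH lines roughly equivalent to the spawner form fields (hypothetical helper)."""
    directives = [
        f"#SBATCH --partition={partition}",  # "Partition" field
        f"#SBATCH --account={account}",      # "Account" field
        f"#SBATCH --time={walltime}",        # wall clock limit, e.g. "HH:MM:SS"
    ]
    if gpu_type and gpus > 0:
        # "GPU type" field; assumed to be requested as gpu:<type>:<count>
        directives.append(f"#SBATCH --gres=gpu:{gpu_type}:{gpus}")
    return directives


if __name__ == "__main__":
    # Hypothetical values; use the ones your form actually offers.
    for line in sbatch_directives("debug", "myproject", "02:00:00", gpu_type="v100"):
        print(line)
```

Seeing the request in this form can help when a session sits in the queue: the same partition, account, GPU, and time choices are what the scheduler weighs against available resources.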

Once the resource request is complete, click "Spawn" to launch the notebook server. Note that the IC is a shared resource: if your request exceeds the currently available resources, your job will be placed in a wait queue. Alternatively, you can run "locally" on a JupyterHub node by checking the small box in the lower left corner. This provides only limited compute resources, but gives you access to Slurm commands for submitting jobs from that host.
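As a rough sketch of submitting work from such a session, the Python below calls `sbatch` through `subprocess`, wrapping a single shell command with `--wrap` and using `--parsable` so the output is just the job ID (optionally followed by `;cluster`). The helper names, the command `python train.py`, and the partition and account values are hypothetical; check which partitions and accounts the form offers you before adapting it.

```python
import subprocess
from typing import List


def build_sbatch_command(command: str, partition: str, account: str,
                         walltime: str) -> List[str]:
    """Build an sbatch call that wraps one shell command in a batch job."""
    return [
        "sbatch",
        "--parsable",               # print only "jobid" (or "jobid;cluster")
        f"--partition={partition}",
        f"--account={account}",
        f"--time={walltime}",
        f"--wrap={command}",        # sbatch generates a batch script around this command
    ]


def parse_job_id(sbatch_output: str) -> int:
    """Extract the numeric job ID from `sbatch --parsable` output."""
    return int(sbatch_output.strip().split(";")[0])


def submit(command: str, partition: str, account: str, walltime: str) -> int:
    """Submit the job and return its Slurm job ID (raises CalledProcessError on failure)."""
    result = subprocess.run(
        build_sbatch_command(command, partition, account, walltime),
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_job_id(result.stdout)
```

For example, `job_id = submit("python train.py", "debug", "myproject", "01:00:00")` returns the job ID, which can then be passed to `squeue -j <jobid>` to follow the job. The same `sbatch` and `squeue` commands can also be run directly from a notebook cell with IPython's `!` shell escape.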