By Costin Caramarcu

This page describes the Institutional Cluster gen 2 (IC) at the SDCC.

Prerequisites:

- Have a valid account with the SDCC

- Apply here

- Have a valid account in Slurm

- Your liaison should contact us via the ticketing system with your name or user ID

Cluster Information:

The cluster consists of:

- 39 CPU worker nodes

- 12 4xA100-SXM4 GPU nodes

- 1 2xA100 80GB PCIe node

- 1 2xA100 40GB PCIe node

- 1 submit node

- 2 master nodes

CPU node details:

- Supermicro SYS-610C-TR

- Intel(R) Xeon(R) Gold 6336Y CPU @ 2.40GHz

- NUMA node0 CPU(s): 0-23

- NUMA node1 CPU(s): 24-47

- Thread(s) per core: 1

- Core(s) per socket: 24

- Socket(s): 2

- NUMA node(s): 2

- 512 GB Memory

- InfiniBand NDR200 connectivity

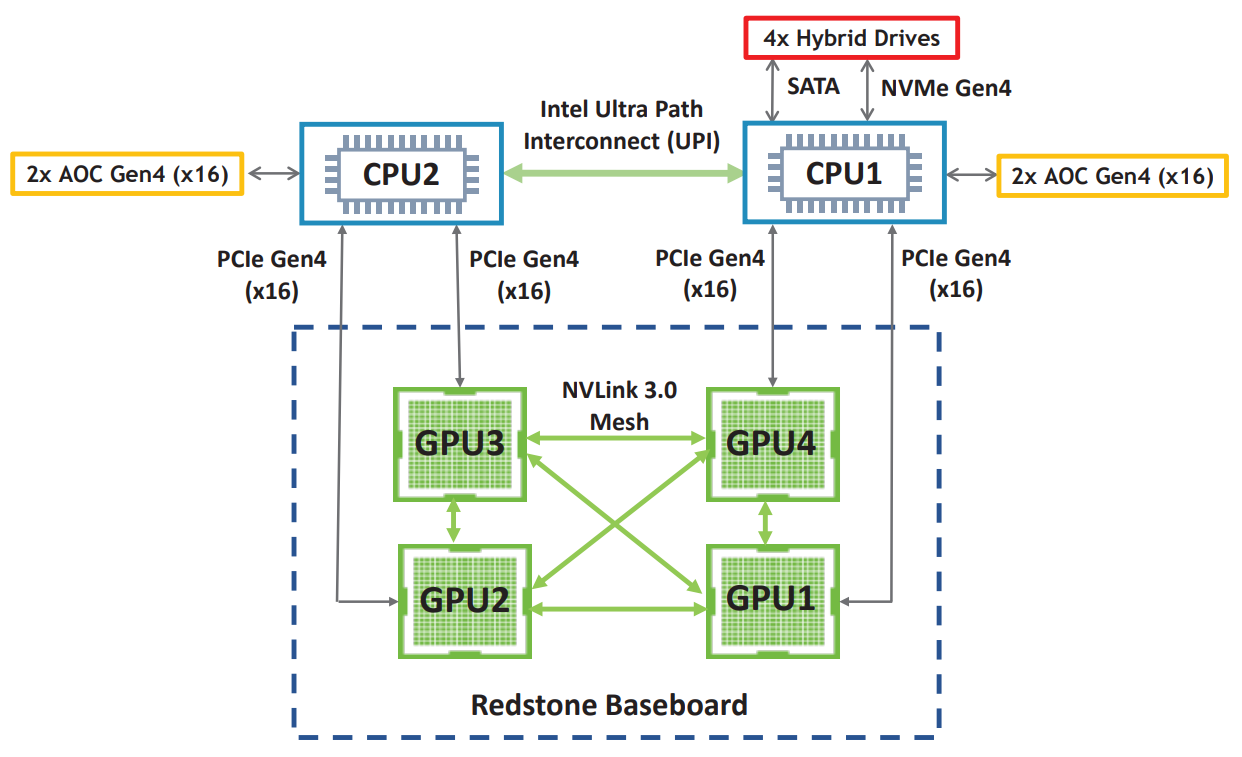

GPU A100-SXM4 node details:

- Supermicro SYS-220GQ-TNAR+

- Intel(R) Xeon(R) Gold 6336Y CPU @ 2.40GHz

- NUMA node0 CPU(s): 0-23

- NUMA node1 CPU(s): 24-47

- Thread(s) per core: 1

- Core(s) per socket: 24

- Socket(s): 2

- NUMA node(s): 2

- 1 TB Memory

- 4x A100-SXM4-80GB

- InfiniBand NDR200 connectivity

GPU 2xA100 (80/40) GB PCIe node details (debug partition):

- Supermicro SYS-120GQ-TNRT

- Intel(R) Xeon(R) Gold 6336Y CPU @ 2.40GHz

- NUMA node0 CPU(s): 0-23

- NUMA node1 CPU(s): 24-47

- Thread(s) per core: 1

- Core(s) per socket: 24

- Socket(s): 2

- NUMA node(s): 2

- 512 GB Memory

- 2xA100 (80/40)GB (amperehost01/amperehost02)

- InfiniBand NDR200 connectivity
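
All three node types above report the same CPU layout: two sockets of 24 cores each, one thread per core, with CPUs 0-23 on NUMA node 0 and CPUs 24-47 on NUMA node 1. The following Python sketch expresses that mapping, which may help when deciding how to pin processes to cores. The constant and function names are illustrative only and are not part of any SDCC tooling.

```python
# CPU layout taken from the node descriptions above.
SOCKETS = 2
CORES_PER_SOCKET = 24
THREADS_PER_CORE = 1
CORES_PER_NODE = SOCKETS * CORES_PER_SOCKET * THREADS_PER_CORE  # 48


def numa_node_of(core_id: int) -> int:
    """Return the NUMA node (0 or 1) that owns a logical CPU ID."""
    if not 0 <= core_id < CORES_PER_NODE:
        raise ValueError(f"core_id must be in 0..{CORES_PER_NODE - 1}")
    return core_id // CORES_PER_SOCKET


def cores_of(numa_node: int) -> range:
    """Return the logical CPU IDs that belong to one NUMA node."""
    if numa_node not in range(SOCKETS):
        raise ValueError(f"numa_node must be in 0..{SOCKETS - 1}")
    start = numa_node * CORES_PER_SOCKET
    return range(start, start + CORES_PER_SOCKET)
```

For example, `numa_node_of(30)` returns 1, and `list(cores_of(0))` lists CPUs 0 through 23.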

Storage:

- 1.9 TB of local disk storage per node

- 1 PB of GPFS distributed storage

- Cluster Storage

Partitions:

| partition | time limit | allowed qos | default time | preempt mode | CPU nodes | GPU nodes | user availability |
|---|---|---|---|---|---|---|---|
| debug | 30 minutes | normal | 5 minutes | off | 0 | 2 | ic |
| cfn | 24 hours | cfn | 5 minutes | off | 35 | 8 | cfn only |
| csi | 24 hours | csi | 5 minutes | off | 0 | 4 | csi only |
| lqcd | 24 hours | lqcd | 5 minutes | off | 4 | 0 | lqcd only |
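
Because the default time is only 5 minutes in every partition, a job that needs longer presumably has to request its time explicitly. The Python sketch below renders a batch script for a given partition and rejects requests that exceed the time limit in the table. The helper `render_job_script` and the `PARTITIONS` dictionary are hypothetical names introduced for illustration. The `#SBATCH` options used (`--partition`, `--qos`, `--time`, `--nodes`) are standard sbatch options, but whether your account needs further options is an assumption to check with your liaison.

```python
# Limits copied from the partition table above; times are in minutes.
PARTITIONS = {
    "debug": {"qos": "normal", "max_minutes": 30},
    "cfn": {"qos": "cfn", "max_minutes": 24 * 60},
    "csi": {"qos": "csi", "max_minutes": 24 * 60},
    "lqcd": {"qos": "lqcd", "max_minutes": 24 * 60},
}


def render_job_script(partition: str, minutes: int, nodes: int, command: str) -> str:
    """Build the text of a batch script for one partition (hypothetical helper)."""
    if partition not in PARTITIONS:
        raise ValueError(f"unknown partition: {partition}")
    limit = PARTITIONS[partition]["max_minutes"]
    if not 0 < minutes <= limit:
        raise ValueError(f"{partition} allows 1..{limit} minutes, got {minutes}")
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    qos = PARTITIONS[partition]["qos"]
    hours, mins = divmod(minutes, 60)
    lines = [
        "#!/bin/bash",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --qos={qos}",
        f"#SBATCH --time={hours:02d}:{mins:02d}:00",  # HH:MM:SS
        f"#SBATCH --nodes={nodes}",
        command,
    ]
    return "\n".join(lines) + "\n"
```

Calling `render_job_script("debug", 20, 1, "srun hostname")` returns a script that requests 20 minutes on one node of the debug partition. Asking for 31 minutes on debug raises `ValueError` before anything is submitted.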

Limits:

- Each user can submit a maximum of 50 jobs

- The maximum number of nodes running jobs for an account varies with the size of the allocation.
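
One way to stay within the 50-job limit when there are many small, independent tasks is to group them into batches and submit one job per batch. The Python sketch below splits a task list into at most 50 batches of near-equal size. It assumes that each submitted job counts once toward the limit. How the limit treats pending versus running jobs, or job arrays, is not stated here, and `pack_tasks` is a hypothetical helper name.

```python
MAX_JOBS_PER_USER = 50  # from the limits listed above


def pack_tasks(tasks, max_jobs=MAX_JOBS_PER_USER):
    """Split tasks into at most max_jobs batches of near-equal size."""
    if max_jobs < 1:
        raise ValueError("max_jobs must be at least 1")
    tasks = list(tasks)
    if not tasks:
        return []
    n_jobs = min(max_jobs, len(tasks))
    base, extra = divmod(len(tasks), n_jobs)
    batches = []
    start = 0
    for i in range(n_jobs):
        # The first `extra` batches take one additional task each.
        size = base + (1 if i < extra else 0)
        batches.append(tasks[start:start + size])
        start += size
    return batches
```

With 120 tasks this yields 50 batches, each holding two or three tasks. With 10 tasks it yields 10 single-task batches.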

Software:

The Institutional Cluster provides software through the module command. Software librarians from the various groups can install additional software as needed.